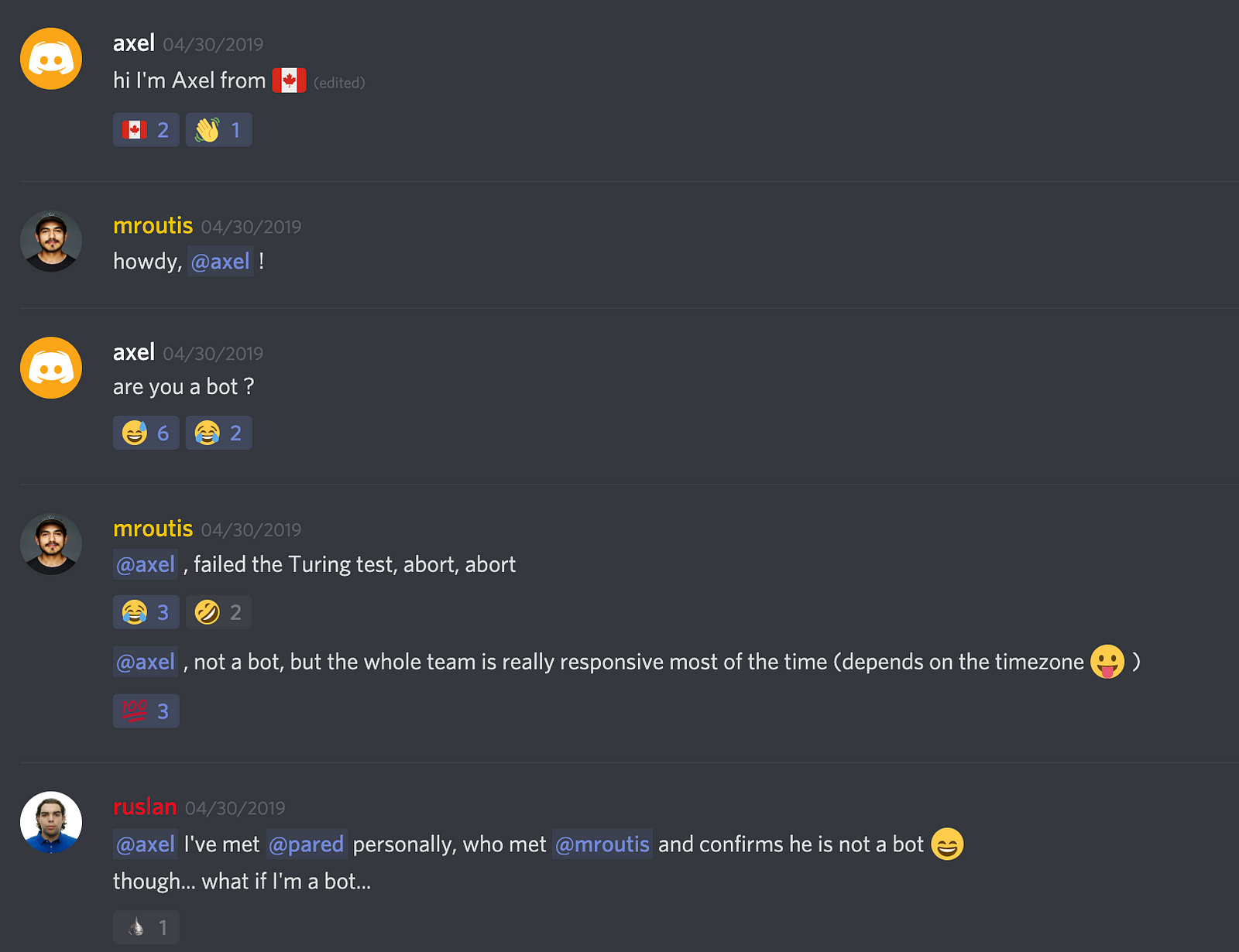

DVC Discord gems

There are lots of hidden gems in our Discord community discussions. Sometimes they are scattered all over the channels and hard to track down.

We will be sifting through the issues and discussions and sharing the most interesting takeaways.

There is no separate guide for that, but it is very straightforward. See the DVC file format description for what a DVC file looks like inside in general. All dvc add or dvc run does is compute the md5 fields in it, that is all. You could write your DVC file by hand and then run dvc repro, which will run the command (if any) and compute all the needed checksums … read more
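The hand-written route described above can be sketched roughly as follows. This is only an illustration: the stage file name train.dvc, the script train.py, and the output model.pkl are hypothetical, and the field layout (cmd, deps, and outs with path entries) is assumed from the DVC file format description, so check it against the DVC version you use.

```shell
# Write a stage file by hand, leaving out every md5 field.
# train.dvc, train.py and model.pkl are hypothetical names used for illustration.
cat > train.dvc <<'EOF'
cmd: python train.py
deps:
- path: train.py
outs:
- path: model.pkl
EOF

# Print the file to confirm what was written.
cat train.dvc
```

Inside an initialized DVC project, running dvc repro train.dvc on such a file should then execute the command and fill in the checksums, as the answer above describes.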

…There’s a ton of code in that project, and it’s very non-trivial to define the code dependencies for my training stage — there are a lot of imports going on, the training code is distributed across many modules … read more

DVC officially supports only regular Azure blob storage. Gen1 Data Lake should be accessible through the same interface, so configuring a regular Azure remote for DVC should work. It seems that Gen2 Data Lake has the blob API disabled. If you know more details about the difference between Gen1 and Gen2, feel free to join our community and share this knowledge.

Apache 2.0. One of the most common and permissive OSS licenses.

- Setting up an S3-compatible remote (Localstack, Wasabi)

$ dvc remote add upstream s3://my-bucket

$ dvc remote modify upstream region REGION_NAME

$ dvc remote modify upstream endpointurl <url>

Find and click the S3 API compatible storage on this page

… it adds the data files that are tracked by DVC there, so that you don’t accidentally add them to Git as well. You can open it with a file editor of your liking and see your data files listed there.

… with DVC, you could connect your data sources from HDFS with your pipeline in your local project by simply specifying them as external dependencies. For example, let’s say your script process.cmd works on an input file on HDFS and then downloads a result to your local workspace. With DVC, it could look something like:

$ dvc run -d hdfs://example.com/home/shared/input -d process.cmd -o output process.cmd

… read more.

If you have any questions, concerns or ideas, let us know!